IJHCS 2026

An Epistemic Network Analysis of Communication Strategies during Drawing-supported Spatial Dialogue in VR

Brandon Victor Syiem, Selen Türkay, Cael Gallagher, Christoph Schrank

Communicating spatial information is challenging using solely verbal or written language, and is often supported by non-verbal gestures and illustrative drawings. However, the growing need for communicating increasingly complex spatial information, coupled with the rise of remote collaboration, presents challenges that current screen-based solutions are ill-equipped to address. Virtual Reality (VR) offers capabilities to support both non-verbal gestures and complex visual aids, through embodied avatars and 3D virtual representations. However, the novelty of creating, referencing, and viewing 3D drawings in VR may influence the interlocutors’ actions, speech and communication performance. We conducted a mixed-methods within-subject study with dyads to investigate the effects of drawing dimension (2D or 3D drawings) on spatial dialogue behaviours in VR. We found no significant effects of drawing dimension on communication performance and workload, but found significantly different interlocutor actions and speech. We discuss relevant implications and highlight considerations unique to the different communication strategies observed during 2D and 3D drawing use for supporting spatial dialogue in VR.

BIT 2024

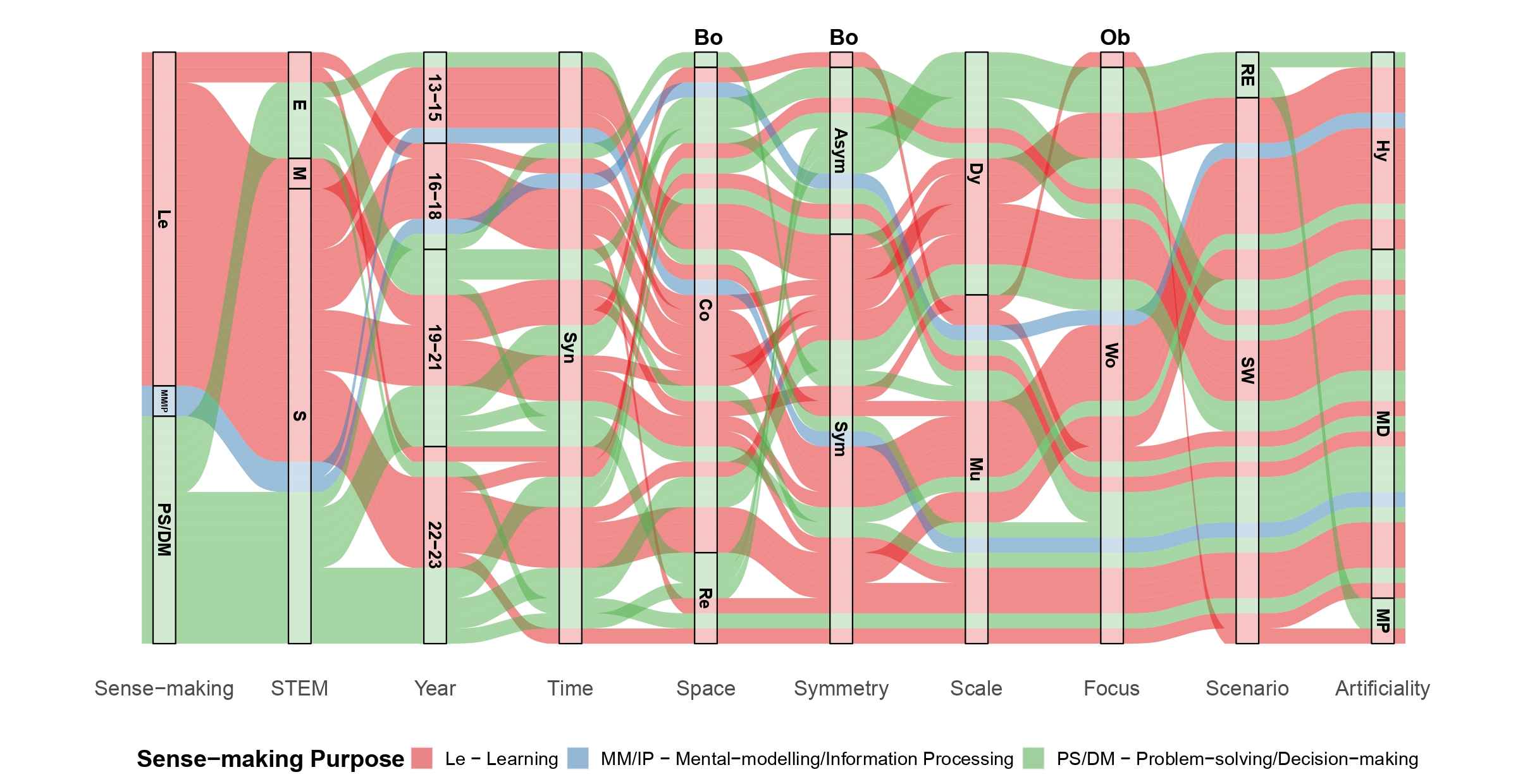

A Systematic Exploration of Collaborative Immersive Systems for Sense-making in STEM

Brandon V. Syiem, Selen Türkay

Scientific sense-making in STEM fields is a complex yet essential activity that greatly benefits from collaboration. However, challenges associated with collaboration, such as the geographic separation of experts, access to specialised equipment, and meaningful data representation, often hinder this process. Solutions to collaborative challenges have been extensively explored in CSCW and HCI literature. Among such solutions, immersive systems offer novel data visualisations, interactions, and representations that can support collaborative sense-making in STEM fields. Recognising the increasing interest of HCI researchers in the intersection of collaboration and immersive systems, we conduct a systematic review to answer pertinent questions regarding the research landscape and the design and implementation of collaborative immersive systems for STEM sense-making. We find that current research leans towards synchronous collaborations, AR technology, and sense-making for learning in science domains. We further discuss prevalent trends and considerations observed in our findings to inform future research directions.

IJHCS 2024

Addressing Attentional Issues in Augmented Reality with Adaptive Agents: Possibilities and Challenges

Brandon Victor Syiem, Ryan M. Kelly, Tilman Dingler, Jorge Goncalves, Eduardo Velloso

Recent work on augmented reality (AR) has explored the use of adaptive agents to overcome attentional issues that negatively impact task performance. However, despite positive technical evaluations, adaptive agents have shown no significant improvements to user task performance in AR. Furthermore, previous works have primarily evaluated such agents using abstract tasks. In this paper, we develop an agent that observes user behaviour and performs appropriate actions to mitigate attentional issues in a realistic sense-making task in AR. We employ mixed methods to evaluate our agent in a between-subject experiment (N=60) to understand the agent’s effect on user task performance and behaviour. While we find no significant improvements in task performance, our analysis revealed that users’ preferences and trust in the agent affected their receptiveness to the agent’s recommendations. We discuss the pitfalls of autonomous agents and highlight the need to shift from designing better Human–AI interactions to better Human–AI collaborations.

CHI 2024

Augmented Reality at Zoo Exhibits: A Design Framework for Enhancing the Zoo Experience

Brandon V. Syiem, Sarah Webber, Ryan M. Kelly, Qiushi Zhou, Jorge Goncalves, Eduardo Velloso

Augmented Reality (AR) offers unique opportunities for contributing to zoos’ objectives of public engagement and education about animal and conservation issues. However, the diversity of animal exhibits poses challenges in designing AR applications that are not encountered in more controlled environments, such as museums. To support the design of AR applications that meaningfully engage the public with zoo objectives, we first conducted two scoping reviews to interrogate previous work on AR and broader technology use at zoos. We then conducted a workshop with zoo representatives to understand the challenges and opportunities in using AR to achieve zoo objectives. Additionally, we conducted a field trip to a public zoo to identify exhibit characteristics that impact AR application design. We synthesise the findings from these studies into a framework that enables the design of diverse AR experiences. We illustrate the utility of the framework by presenting two concepts for feasible AR applications.

CHI 2024

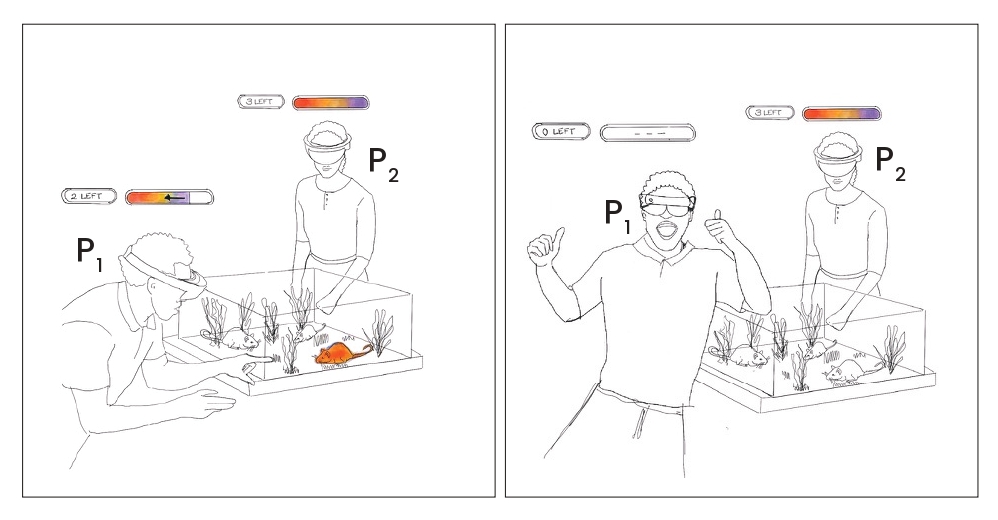

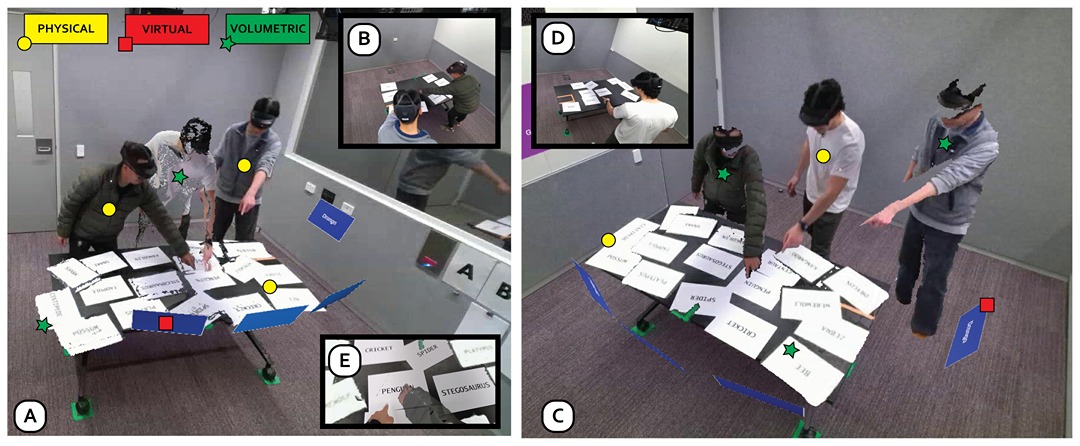

Volumetric Hybrid Workspaces: Interactions with Objects in Remote and Co-located Telepresence

Andrew Irlitti, Mesut Latifoglu, Thuong Hoang, Brandon Victor Syiem, Frank Vetere

Volumetric telepresence aims to create a shared space, allowing people in local and remote settings to collaborate seamlessly. Prior telepresence examples typically have asymmetrical designs, with volumetric capture in one location and objects in one format. In this paper, we present a volumetric telepresence mixed reality system that supports real-time, symmetrical, multi-user, partially distributed interactions, using objects in multiple formats, across multiple locations. We align two volumetric environments around a common spatial feature to create a shared workspace for remote and co-located people using objects in three formats: physical, virtual, and volumetric. We conducted a study with 18 participants over 6 sessions, evaluating how telepresence workspaces support spatial coordination and hybrid communication for co-located and remote users undertaking collaborative tasks. Our findings demonstrate the successful integration of remote spaces, effective use of proxemics and deixis to support negotiation, and strategies to manage interactivity in hybrid workspaces.

IMWUT 2024

Reflected Reality: Augmented Reality through the Mirror

Qiushi Zhou, Brandon Victor Syiem, Beier Li, Jorge Goncalves, Eduardo Velloso

We propose Reflected Reality: a new dimension for augmented reality that expands the augmented physical space into mirror reflections. By synchronously tracking the physical space in front of the mirror and the reflection behind it using an AR headset and an optional smart mirror component, reflected reality enables novel AR interactions that allow users to use their physical and reflected bodies to find and interact with virtual objects. We propose a design space for AR interaction with mirror reflections, and instantiate it using a prototype system featuring a HoloLens 2 and a smart mirror. We explore the design space along the following dimensions: the user’s perspective of input, the spatial frame of reference, and the direction of the mirror space relative to the physical space. Using our prototype, we visualise a use case scenario that traverses the design space to demonstrate its interaction affordances in a practical context. To understand how users perceive the intuitiveness and ease of reflected reality interaction, we conducted an exploratory study and a formal user evaluation to characterise user performance of AR interaction tasks in reflected reality. We discuss the unique interaction affordances that reflected reality offers, and outline possibilities for its future applications.

CEXR 2023

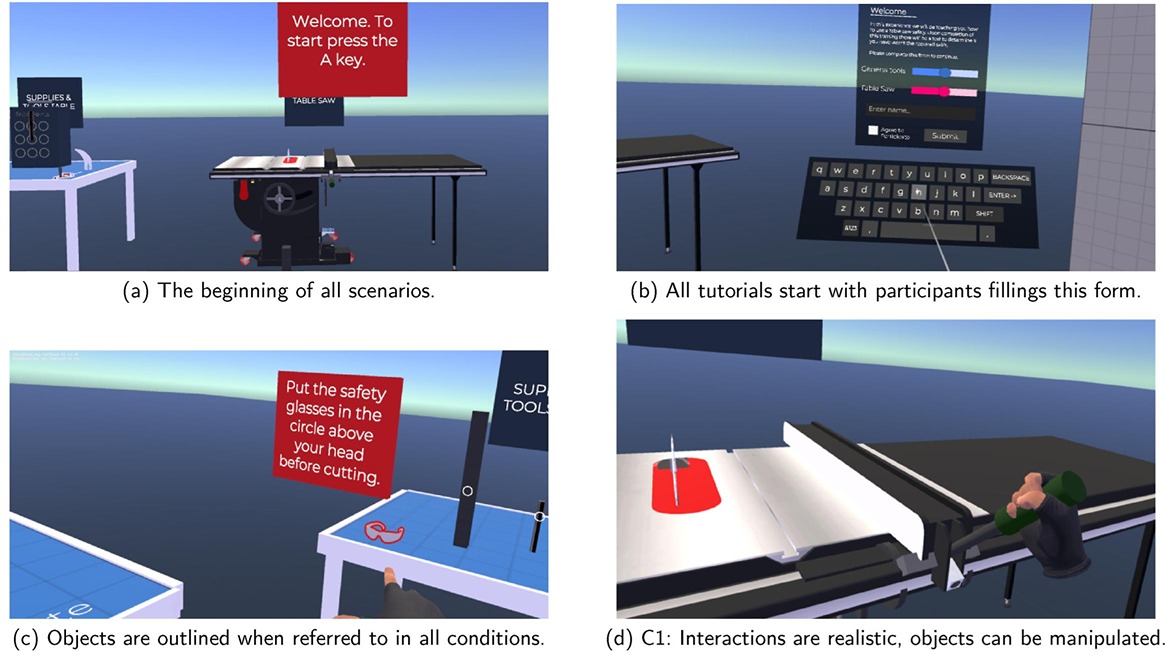

Hands-on or Hands-off: Deciphering the Impact of Interactivity on Embodied Learning in VR

Sara Khorasani, Brandon Victor Syiem, Sadia Nawaz, Jarrod Knibbe, Eduardo Velloso

Studies suggest that the Sense of Embodiment (SoE) enabled by VR promotes embodied and active learning. However, it is unclear which features of VR learning environments tap into the concept of embodied learning. For example, interaction techniques, movement, and purely observational scenarios in VR can all play a role in facilitating embodied learning. To understand how these mechanisms impact learning, we conducted 2 studies with a total of 64 participants who had no prior experience in the training task. Participants were taught how to use a table saw in 4 conditions and were tested on their task performance in a fully interactive VR assessment. The conditions were analyzed in pairs: 2 conditions with different interaction techniques, 2 conditions with differing ability to move, and a cross-study analysis comparing purely observational learning to interactive learning. We used a mixed-methods approach: Analysis of Variance (ANOVA) and pairwise comparisons of the learning outcomes in each condition, as well as thematic analysis of the interview results. We found that some types of “hands-on” interactions can have a detrimental impact on learning and that observational learning can be as impactful as a fully interactive experience. Based on participant interviews, we explored how these mechanisms of the learning environment can impact participants’ learning ability.

CHI 2023

Modeling Temporal Target Selection: A Perspective from Its Spatial Correspondence

Difeng Yu, Brandon Victor Syiem, Andrew Irlitti, Tilman Dingler, Eduardo Velloso, Jorge Goncalves

Temporal target selection requires users to wait and trigger the selection input within a bounded time window, with a selection cursor that is expected to be delayed. This task conceptualizes, for example, a variety of game scenarios such as determining the timing of shooting a projectile towards a moving object. In this work, we explore models that predict “when” users typically perform a selection (i.e., user selection distribution) and their selection error rates in such tasks. We hypothesize that users react to temporal factors including “distance”, “width”, and “delay” in the same way as they treat the corresponding variables in spatial target selection. The derived models are evaluated in a controlled experiment and an MTurk-based online study. Our research contributes new knowledge on user behavior in temporal target selection tasks and its potential connection with its spatial correspondence. Our models and conclusions can benefit both users and designers of relevant interactive applications.

Best Paper Award

CHI 2021

Impact of Task on Attentional Tunneling in Handheld Augmented Reality

Brandon Victor Syiem, Ryan M. Kelly, Jorge Goncalves, Eduardo Velloso, Tilman Dingler

Attentional tunneling describes a phenomenon in Augmented Reality (AR) where users excessively focus on virtual content while neglecting their physical surroundings. This leads to the concern that users could neglect hazardous situations when using AR applications. However, studies have often confounded the role of the virtual content with the role of the associated task in inducing attentional tunneling. In this paper, we disentangle the impact of the associated task and of the virtual content on the attentional tunneling effect by measuring reaction times to events in two user studies. We found that presenting virtual content did not significantly increase user reaction times to events, but adding a task to the content did. This work contributes towards our understanding of the attentional tunneling effect on handheld AR devices, and highlights the need to consider both task and context when evaluating AR application usage.

ISMAR 2020

Enhancing Visitor Experience or Hindering Docent Roles: Attentional Issues in Augmented Reality Supported Installations

Brandon Victor Syiem, Ryan M. Kelly, Eduardo Velloso, Jorge Goncalves, Tilman Dingler

Studies using augmented reality (AR) technology have suggested that users focus excessively on the virtual content in the AR environment at the expense of the physical world around them. This has implications related to the design of installations that aim to incorporate the user’s physical environment as part of the AR experience. To better understand how user attention is managed in an AR environment, we present an observational study of Rewild Our Planet, a multi-modal installation that combined video, audio, a human docent and mobile AR to promote awareness about environmental issues. We found that, while AR was successful in engaging visitors, it drew attention away from other modalities within the installation. This impacts the work of the human docent and affects how visitors absorb information presented in the installation. Based on these observations, we present guidelines to inform the design of future AR-supported installations with the aim of minimizing or taking advantage of the observed attentional issues.